One of the projects I work on is CC: Tweaked, a mod which adds programmable computers to Minecraft. Aside from computers, CC: Tweaked also provides several peripherals including monitors, which allow you to draw text and images to a blocky screen.

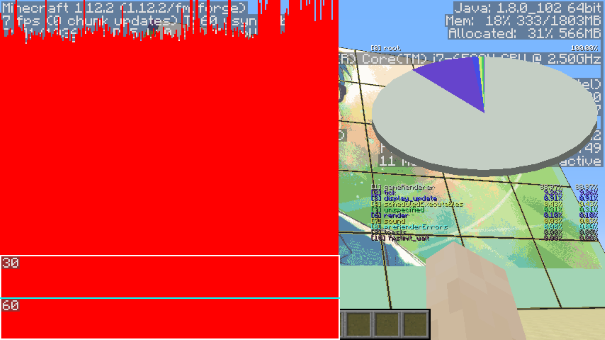

As the mod has developed, people have begun to do more and more with monitors, and have made them increasingly large. Recently, someone sent me a screenshot of 35 monitors stitched together [1] to form an HD monitor, with an effective resolution of 1968x1215 [2]. However, when I came to test this on my own world, I found the performance was abysmal, averaging 7-10fps.

In small numbers, monitors have relatively little impact, but they do not scale well: even 30 full-sized monitors can slow the game to a crawl. It was clear some improvements needed to be made.

Before we get into the changes made, it’s worth discussing what’s going on internally. Each monitor is made up of a big 2D array of cells. Each cell has three properties: the character to draw (such as A), the colour of that character, and the background colour. The monitor also stores a palette, which maps each colour index used by a cell (ranging 0-15) to an actual RGB value.
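To make that layout concrete, here is a minimal sketch of the data model. It is written in Python for brevity rather than the mod's Java, and the names `Cell`, `Terminal`, `write` and `resolve`, as well as the greyscale default palette, are illustrative assumptions rather than CC: Tweaked's actual classes.

```python
from dataclasses import dataclass, field


@dataclass
class Cell:
    char: str = " "  # the character to draw
    fg: int = 0      # text colour, as a palette index (0-15)
    bg: int = 15     # background colour, as a palette index (0-15)


@dataclass
class Terminal:
    width: int
    height: int
    # Hypothetical default: 16 grey levels. The real palette holds arbitrary RGB values.
    palette: list = field(default_factory=lambda: [(i * 17, i * 17, i * 17) for i in range(16)])
    cells: list = field(init=False)

    def __post_init__(self):
        # A 2D array of cells, indexed as cells[y][x].
        self.cells = [[Cell() for _ in range(self.width)] for _ in range(self.height)]

    def write(self, x, y, text, fg, bg):
        """Write text starting at (x, y), clipping at the right edge."""
        for offset, char in enumerate(text):
            if x + offset < self.width:
                self.cells[y][x + offset] = Cell(char, fg, bg)

    def resolve(self, x, y):
        """Look up a cell and map its colour indices through the palette."""
        cell = self.cells[y][x]
        return cell.char, self.palette[cell.fg], self.palette[cell.bg]
```

The important point is that cells only ever store small palette indices; the RGB values live in one place and are looked up at draw time.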

The current renderer builds up an array containing all vertex data. Each cell becomes two quads - one containing the background, and another containing the actual character. This is then rendered using a mixture of glVertexPointer and glDrawArrays, using display lists to cache monitors when they do not change.
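As a rough illustration of the "two quads per cell" approach, the sketch below builds the vertex list for a small terminal. The function names, the 6x9 cell size and the use of a `layer` value to separate background from glyph are all assumptions made for this example; the real renderer also attaches texture coordinates and colours to each vertex.

```python
def quad(x0, y0, x1, y1, layer):
    """Return the four corner vertices of an axis-aligned quad."""
    return [(x0, y0, layer), (x0, y1, layer), (x1, y1, layer), (x1, y0, layer)]


def build_vertices(width, height, cell_w=6, cell_h=9):
    """Emit a background quad and a glyph quad for every cell."""
    vertices = []
    for row in range(height):
        for col in range(width):
            x0, y0 = col * cell_w, row * cell_h
            x1, y1 = x0 + cell_w, y0 + cell_h
            vertices += quad(x0, y0, x1, y1, layer=0)  # background
            vertices += quad(x0, y0, x1, y1, layer=1)  # character glyph
    return vertices
```

Every cell contributes eight vertices regardless of its contents, so the array grows linearly with the number of cells on screen.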

On some graphics cards, this performs incredibly well [3]. However, the screenshot above demonstrates this is not the case on my Intel integrated graphics.

Attempt #1: Vertex Buffers

Historically, Minecraft has avoided using any recent OpenGL features (anything after OpenGL 1.3), in order to ensure it can run on a wide range of computers. This has changed recently, with some OpenGL 2.1 features being optionally supported and, as of Minecraft 1.15, required.

The most interesting of these features are Vertex Buffer Objects or VBOs. They’re a staple of modern OpenGL, and an obvious choice here. Our rendering code is just building up a static array of vertices after all! Rewriting the renderer to use VBOs produces a dramatic performance improvement.

In the above screenshot, the monitor is not changing at all. However, if the monitor were to be changing every frame, we’d have a very similar graph to our display lists again.

Every cell on our monitor requires 192 bytes to describe in our vertex buffer. This means that every time all 30 monitors change, we send dozens of megabytes to the GPU, dropping multiple frames. In order to get faster, we need to start sending less information.
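A quick back-of-the-envelope calculation shows where that figure leads. The vertex layout below (three position floats, two texture-coordinate floats and four colour bytes) is an assumption: it is one layout that happens to give 192 bytes per cell, not necessarily the one the renderer uses. The 984x405 grid is the character resolution quoted in the footnotes.

```python
import struct

# Assumed layout: position (3 floats), texture coordinate (2 floats), RGBA colour (4 bytes).
VERTEX_FORMAT = "<3f2f4B"
BYTES_PER_VERTEX = struct.calcsize(VERTEX_FORMAT)
VERTICES_PER_CELL = 2 * 4  # two quads, four vertices each
BYTES_PER_CELL = BYTES_PER_VERTEX * VERTICES_PER_CELL


def upload_size(width, height):
    """Bytes needed to describe every cell of a width x height grid."""
    return width * height * BYTES_PER_CELL


total = upload_size(984, 405)
print(BYTES_PER_CELL, total, round(total / 1e6, 1))
```

Under these assumptions a full redraw comes to roughly 76.5 MB, which is far more than we want to push to the GPU every frame.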

Attempt #1.5: Merging quads

One obvious, albeit minor optimisation is to reduce the number of quads we send.

In the above image, you’ll notice that the background is often one solid block. Instead of rendering one quad for each cell, we can merge adjacent ones into a single larger quad. This reduces both the amount of information sent and the number of vertices drawn. Fantastic!
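One simple way to do this merging is to scan each row and collapse runs of cells that share a background colour. The sketch below assumes merging only happens horizontally within a row, and the function name and the `(x, y, width, colour)` tuple format are made up for illustration.

```python
def merge_background_runs(bg_rows):
    """Collapse horizontal runs of equal background colour into single quads.

    bg_rows is a list of rows, each a list of palette indices.
    Returns (x, y, width, colour) tuples, one per merged quad.
    """
    quads = []
    for y, row in enumerate(bg_rows):
        start = 0
        for x in range(1, len(row) + 1):
            # Close the current run at the end of the row or when the colour changes.
            if x == len(row) or row[x] != row[start]:
                quads.append((start, y, x - start, row[start]))
                start = x
    return quads
```

For example, the two rows `[0, 0, 0, 1]` and `[2, 2, 2, 2]` need three background quads instead of eight. The character quads are untouched, which limits how much this can save.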

While this definitely reduces the amount of data sent, unfortunately it’s a tiny drop in the ocean, and so doesn’t noticeably improve performance.

Attempt #2: Texture Buffers

One fantastic suggestion, put forward by Lemmmy and Lignum on GitHub, was to use an OpenGL 3.1 feature known as Texture Buffers (or TBOs). Instead of sending vertices to the GPU, we send the raw terminal data and use a shader to convert this into an image.

This means that instead of sending 192 bytes for each cell, we only need to send 3: the character, the text colour and the background colour. Furthermore, instead of drawing two quads for each cell, we only draw one for the entire terminal.
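The idea can be sketched on the CPU. Below, `pack_cells` flattens the terminal into three bytes per cell, and `sample` is a stand-in for the lookup a fragment shader would perform for each pixel. Both names and the exact byte order are assumptions for this example; the real implementation does the lookup in a GLSL shader reading from the texture buffer.

```python
def pack_cells(cells):
    """Flatten rows of (char_code, fg, bg) into a buffer of 3 bytes per cell."""
    buf = bytearray()
    for row in cells:
        for char_code, fg, bg in row:
            buf += bytes((char_code, fg, bg))
    return bytes(buf)


def sample(buf, width, x, y, palette):
    """CPU stand-in for the shader: find the cell at (x, y) and resolve its colours."""
    i = 3 * (y * width + x)
    char_code, fg, bg = buf[i], buf[i + 1], buf[i + 2]
    return char_code, palette[fg], palette[bg]
```

With this layout, the 984x405 grid from earlier shrinks from tens of megabytes of vertices to about 1.2 MB of cell data (984 × 405 × 3 bytes).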

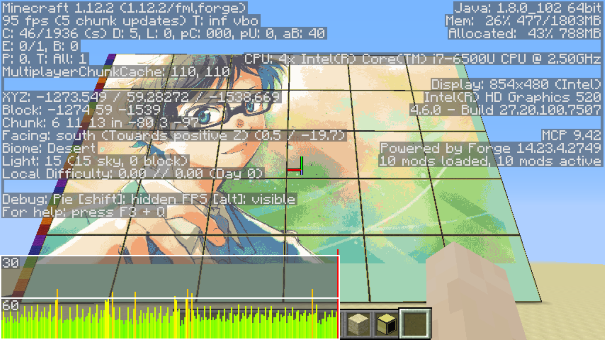

Trying this out yields fantastic results. Our terminal from before now renders at a whopping 105fps (this is Intel integrated graphics, remember), and rarely drops below 60, even when updating.

The obvious question from here is how far we can scale it up before it becomes unusable again. It turns out, pretty far. On my machine, I’m able to quadruple the number of monitors, and remain at 50fps when the monitors are static (40-50fps when changing every second).

These 120 monitors [4] have an effective resolution of 3972x2330, which I’m fairly sure makes it a 4K display (and certainly a higher resolution than my actual monitor). The fact that this works as well as it does is fairly astounding [5].

This system is not without its caveats. Due to our use of shaders, it doesn’t appear to play well with Optifine - monitors will render transparently. It’s possible to switch between systems within CC’s config, should you need to. That said, it’s still an incredible improvement from where we started.

I’d like to give a massive shout of thanks to Lemmmy and Lignum for their help in designing, testing and reviewing these changes. There is no way I would have achieved this on my own. As always, contributions on GitHub are welcome!

[1] Apologies for the large number of anime stills in this post. Due to their small colour palette, they make fantastic demonstration images.

[2] The “actual” resolution is 984x405. However, each character can be split into a 2x3 image using Unicode’s block elements, which gives us an “effective resolution” for drawing images.

[3] For instance, on NVIDIA chips this appears to have identical performance to VBOs.

[4] This is actually the maximum number of monitors I can fit before hitting Minecraft’s entity render limit.

[5] That said, the network traffic of this is horrific. We don’t apply any compression, so it sends a 3MB packet every time the monitor changes. Please don’t go building this on a server.